One day, for example on Black Friday, traffic to your application far exceeds the usual number of requests, and the running web server containers cannot handle the load. The worst case happens: denial of service (DoS). Your service is no longer available. To avoid this, you need the right framework to autoscale virtual machines and containers according to your application's demands.

Autoscaling-related components for Kubernetes

Kubernetes (K8s) is able to scale in general. The components listed below all autoscale something, but they are unrelated to each other (developed in separate Kubernetes projects) and address different use cases and concepts.

Cluster Autoscaler

adjusts the size of a Kubernetes Cluster

Vertical Pod Autoscaler

adjusts the amount of CPU and memory requested by pods running in the cluster

Addon Resizer - beta

modifies resource requests of a deployment based on the number of nodes
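To make the last item concrete, here is a minimal sketch of a resource request that grows with the number of nodes. It assumes a simple linear rule (a fixed base plus a per-node increment). The function name `scaled_request` and all numbers are hypothetical and chosen for illustration only; they are not taken from any component's actual configuration.

```python
def scaled_request(base: int, extra_per_node: int, node_count: int) -> int:
    """Return a resource request that grows linearly with cluster size.

    Assumption: request = base + extra_per_node * node_count.
    """
    if node_count < 0:
        raise ValueError("node_count must be non-negative")
    return base + extra_per_node * node_count


# Hypothetical values: 100 millicores base plus 2 millicores per node.
cpu_millicores = scaled_request(base=100, extra_per_node=2, node_count=50)
print(cpu_millicores)  # 200
```

A linear rule like this keeps the request predictable: doubling the number of nodes doubles only the per-node share, not the base.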

Extended control over autoscaling, deployment and security

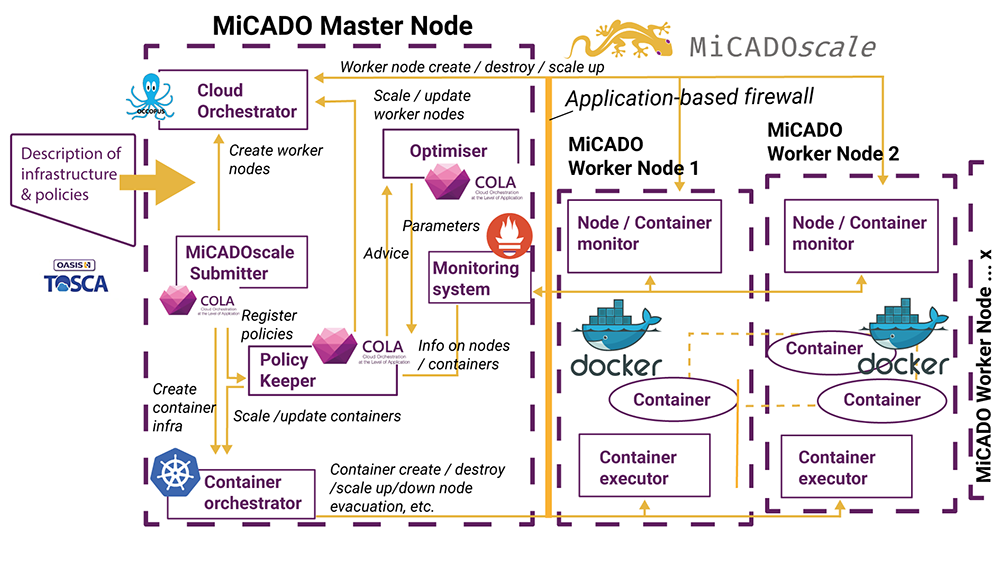

MiCADOscale extends the functionality of K8s and other tools. The framework unites outstanding tools such as K8s, Prometheus, and Terraform, and uses their capabilities to monitor and orchestrate custom-defined scaling parameters and security policies.

Building upon K8s, MiCADOscale makes the autoscaling of containers and virtual machines more configurable, while respecting your individual and public compliance regulations, such as the General Data Protection Regulation (GDPR) of the European Union.

Flexible portability of applications and policies

The necessary data is described in an Application Description Template (ADT). With the open-source autoscaling and orchestration framework, portable scaling parameters and policies can be defined at the virtual machine level as well as at the application level. At the virtual machine (VM) level, a built-in Kubernetes cluster is dynamically extended or reduced by adding or removing virtual machines. At the Kubernetes level, the number of replicas tied to a specific Kubernetes Deployment can be increased or decreased.
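The two scaling levels can be sketched as follows. This is an illustration under stated assumptions, not MiCADOscale's actual policy logic: it uses a generic proportional rule (scale the replica count by the ratio of observed to target load, then clamp to configured bounds) and a hypothetical fixed number of replicas per VM. All function names, parameters, and values are invented for the example.

```python
import math


def clamp(value: int, minimum: int, maximum: int) -> int:
    """Keep value within the inclusive range [minimum, maximum]."""
    return max(minimum, min(maximum, value))


def desired_replicas(current: int, observed: float, target: float,
                     minimum: int, maximum: int) -> int:
    """Kubernetes level: scale replicas in proportion to observed/target load."""
    if target <= 0:
        raise ValueError("target must be positive")
    return clamp(math.ceil(current * observed / target), minimum, maximum)


def desired_vms(replicas: int, replicas_per_vm: int,
                minimum: int, maximum: int) -> int:
    """VM level: provision enough virtual machines to host the replicas."""
    if replicas_per_vm <= 0:
        raise ValueError("replicas_per_vm must be positive")
    return clamp(math.ceil(replicas / replicas_per_vm), minimum, maximum)


# Hypothetical situation: 4 replicas at 90% average CPU with a 60% target.
replicas = desired_replicas(current=4, observed=90.0, target=60.0,
                            minimum=1, maximum=10)
vms = desired_vms(replicas, replicas_per_vm=4, minimum=1, maximum=5)
print(replicas, vms)  # 6 2
```

Clamping both results to a minimum and maximum keeps a traffic spike from scaling the deployment or the VM pool beyond what the policy allows.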
